Kubernetes on Google Cloud (GKE)#

Google Kubernetes Engine (GKE) is the simplest and most common way of setting up a Kubernetes Cluster. You may be able to receive free credits for trying it out (though note that a free account comes with limitations). Either way, you will need to connect your credit card or other payment method to your google cloud account.

Go to console.cloud.google.com and log in.

Note

Consider setting a cloud budget for your Google Cloud account in order to make sure you don’t accidentally spend more than you wish to.

Go to and enable the Kubernetes Engine API.

Choose a terminal.

You can either to use a web based terminal or install and run the required command line interfaces on your own computer’s terminal. We recommend starting out by using the web based terminal. Choose one set of instructions below.

Use a web based terminal:

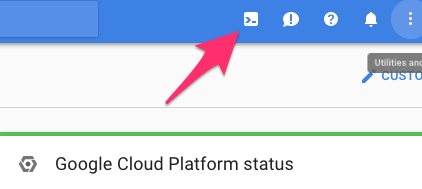

Start Google Cloud Shell from console.cloud.google.com by clicking the button shown below. You are now in control of a virtual machine with various tools preinstalled. If you save something in a user folder they will remain available to you if you return at a later stage. Additional documentation about Google Cloud shell is available here

Use your own computer’s terminal:

Download and install the

gcloudcommand line tool at its install page. It will help you create and communicate with a Kubernetes cluster.Install

kubectl(reads kube control), it is a tool for controlling Kubernetes clusters in general. From your terminal, enter:gcloud components install kubectl

Create a managed Kubernetes cluster and a default node pool.

Ask Google Cloud to create a managed Kubernetes cluster and a default node pool to get nodes from. Nodes represents hardware and a node pool will keep track of how much of a certain type of hardware that you would like.

gcloud container clusters create \ --machine-type n1-standard-2 \ --num-nodes 2 \ --zone <compute zone from the list linked below> \ --cluster-version latest \ <CLUSTERNAME>

Replace

<CLUSTERNAME>with a name that can be used to refer to this cluster in the future.--machine-typespecifies the amount of CPU and RAM in each node within this default node pool. There is a variety of types to choose from.--num-nodesspecifies how many nodes to spin up. You can change this later through the cloud console or using thegcloudcommand line tool.--zonespecifies the data center zone where your cluster will be created. You can pick something from this list that is not too far away from your users.A region in GCP is a geographical region with at least three zones, where each zone is representing a datacenter with servers etc.

A regional cluster creates pods across zones in a region(three by default), distributing Kubernetes resources across multiple zones in the region. This is different from the default cluster, which has all its resources within a single zone(as shown above).

A regional cluster has Highly Available (HA) kubernetes api-servers, this allows jupyterhub which uses them to have no downtime during upgrades of kubernetes itself.

They also increase control plane uptime to 99.95%.

To avoid tripling the number of nodes while still having HA kubernetes, the

--node-locationsflag can be used to specify a single zone to use.

To test if your cluster is initialized, run:

kubectl get node

The response should list two running nodes (or however many nodes you set with

--num-nodesabove).Give your account permissions to perform all administrative actions needed.

kubectl create clusterrolebinding cluster-admin-binding \ --clusterrole=cluster-admin \ --user=<GOOGLE-EMAIL-ACCOUNT>

Replace

<GOOGLE-EMAIL-ACCOUNT>with the exact email of the Google account you used to sign up for Google Cloud.Did you enter your email correctly? If not, you can run

kubectl delete clusterrolebinding cluster-admin-bindingand do it again.[optional] Create a node pool for users

This is an optional step, for those who want to separate user pods from “core” pods such as the Hub itself and others. See Optimizations for details on using a dedicated user node pool.

The nodes in this node pool are for the users only. The node pool has autoscaling enabled along with a lower and an upper scaling limit. This means that the amount of nodes is automatically adjusted along with the amount of users scheduled.

The

n1-standard-2machine type has 2 CPUs and 7.5 GB of RAM each of which about 0.2 CPU will be requested by system pods. It is a suitable choice for a free account that has a limit on a total of 8 CPU cores.Note that the node pool is tainted. Only user pods that are configured with a toleration for this taint can schedule on the node pool’s nodes. This is done in order to ensure the autoscaler will be able to scale down when the user pods have stopped.

gcloud beta container node-pools create user-pool \ --machine-type n1-standard-2 \ --num-nodes 0 \ --enable-autoscaling \ --min-nodes 0 \ --max-nodes 3 \ --node-labels hub.jupyter.org/node-purpose=user \ --node-taints hub.jupyter.org_dedicated=user:NoSchedule \ --zone us-central1-b \ --cluster <CLUSTERNAME>

Note

Consider adding the

--preemptibleflag to reduce the cost significantly. You cancompare the prices here <https://cloud.google.com/compute/docs/machine-types>. See thepreemptible node documentation <https://cloud.google.com/compute/docs/instances/preemptible>for more information.

Congrats. Now that you have your Kubernetes cluster running, it’s time to begin Setting up helm.